Scores

Scores are a crucial part of developing and improving your LLM application or agent.

There are multiple categories of Scores:

- End-User Feedback - Explicit: For example, a thumbs-up or thumbs-down on a chatbot's answer. This is enabled automatically for chatbots built with Chainlit. Otherwise, you can use the score API in your application.

- End-User Feedback - Implicit: For example, the conversion rate to a paid offering increases by 15% with a new RAG version.

- AI Evaluation: A score generated by an online evaluation done by an AI model. You can define metrics like faithfulness, hallucination, harmfulness etc.

- Human Evaluation: A score given by a human developer, product owner or reviewer. You can define metrics like faithfulness, hallucination, harmfulness etc.
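As a rough illustration of how these categories turn into numbers, the sketch below converts an explicit thumbs-up/down into a score payload and computes the relative uplift behind an implicit signal such as the conversion example. The helper names, the 1.0/0.0 mapping and the payload keys (modelled on the `create_score` fields used in the programmatic example on this page) are assumptions for illustration, not part of the Literal AI SDK.

```python
from typing import Optional


def thumbs_to_score(thumbs_up: bool, comment: Optional[str] = None) -> dict:
    """Map explicit end-user feedback to a score payload (hypothetical helper).

    Assumption: thumbs-up -> 1.0, thumbs-down -> 0.0.
    """
    return {
        "type": "HUMAN",  # end-user feedback is given by a human
        "name": "user-feedback",
        "value": 1.0 if thumbs_up else 0.0,
        "comment": comment,
    }


def relative_uplift(rate_before: float, rate_after: float) -> float:
    """Relative change of an implicit metric, such as a conversion rate."""
    if rate_before <= 0:
        raise ValueError("rate_before must be positive")
    return (rate_after - rate_before) / rate_before


# A conversion rate moving from 20% to 23% is a 15% relative increase.
print(round(relative_uplift(0.20, 0.23), 2))  # 0.15
```

Implicit signals like this are usually measured outside the application and then attached as scores, whereas explicit feedback maps directly to one score per rating.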

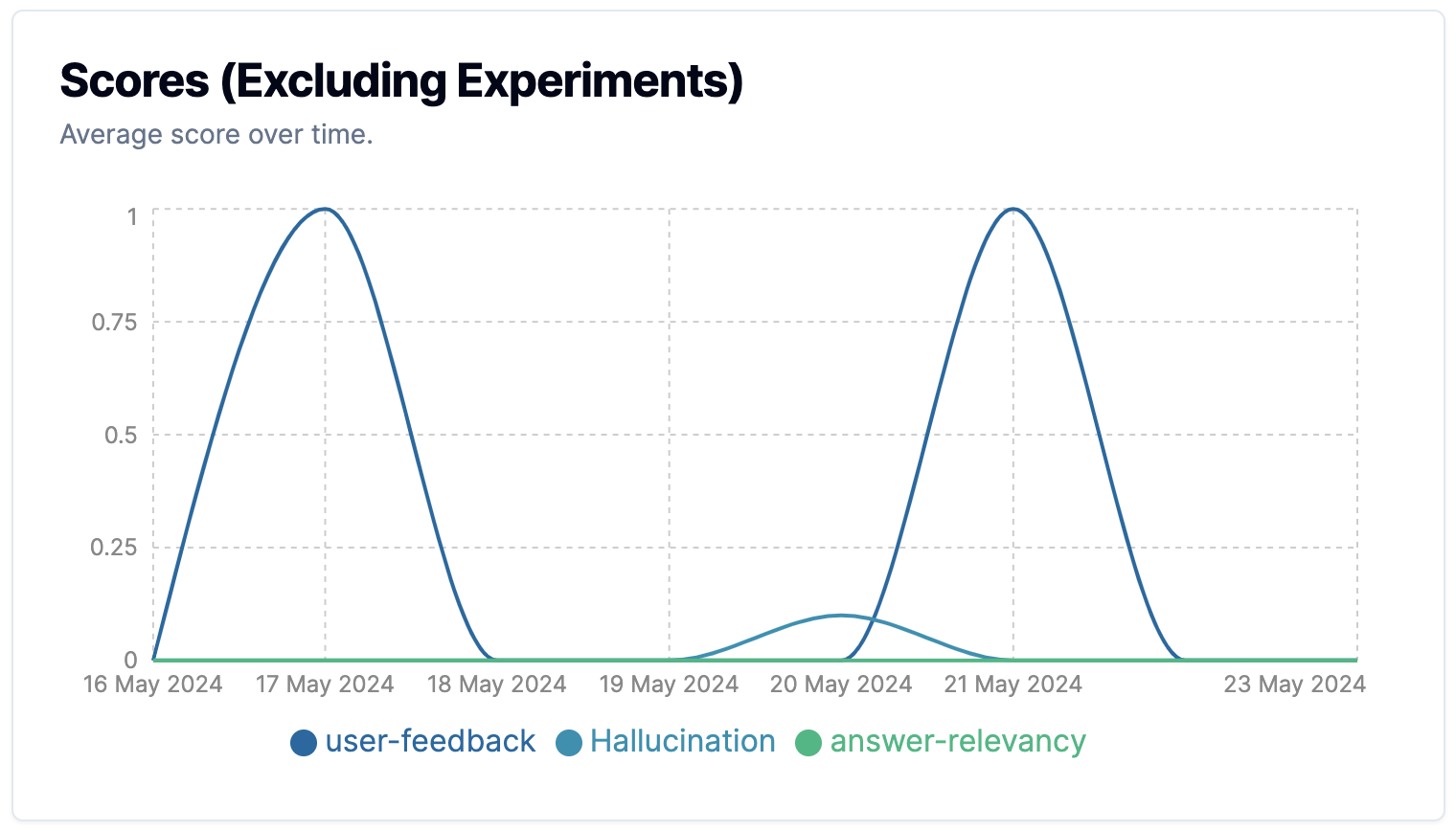

You can get a quick overview of scores over time on the Dashboard.

Scores on the Literal AI Dashboard

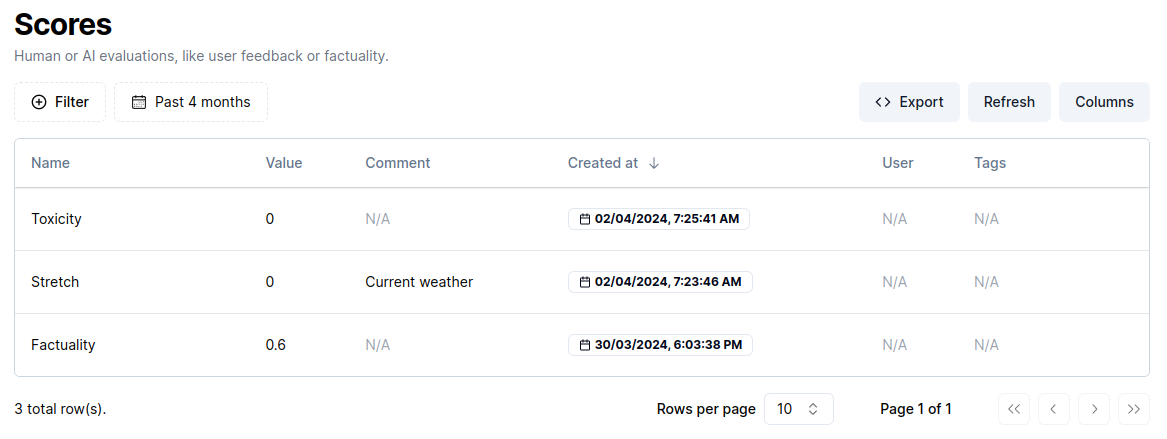
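To picture what an "over time" view summarizes, here is a sketch that averages score values per score name and per day. The record fields (`name`, `value`, `created_at`) and the sample values are made up for illustration; this is not how the Literal AI Dashboard computes its charts.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def daily_averages(scores: list[dict]) -> dict[tuple[str, str], float]:
    """Average score values grouped by (score name, ISO calendar day)."""
    buckets: dict[tuple[str, str], list[float]] = defaultdict(list)
    for score in scores:
        day = score["created_at"].date().isoformat()
        buckets[(score["name"], day)].append(score["value"])
    return {key: mean(values) for key, values in buckets.items()}


# Hypothetical sample: two ratings on May 1st, one on May 2nd.
scores = [
    {"name": "user-feedback", "value": 1.0, "created_at": datetime(2024, 5, 1, 9)},
    {"name": "user-feedback", "value": 0.0, "created_at": datetime(2024, 5, 1, 17)},
    {"name": "user-feedback", "value": 1.0, "created_at": datetime(2024, 5, 2, 11)},
]
print(daily_averages(scores))
# {('user-feedback', '2024-05-01'): 0.5, ('user-feedback', '2024-05-02'): 1.0}
```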

An overview of all the scores given is also available:

Example of Scores collected on Literal AI

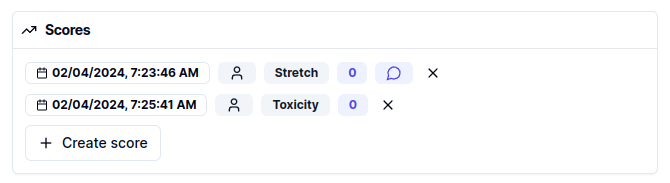

Scores are tied to a Step or a Generation. As such, they appear in their respective info panels:

Example of Scores on a Step

Create a score

From the application

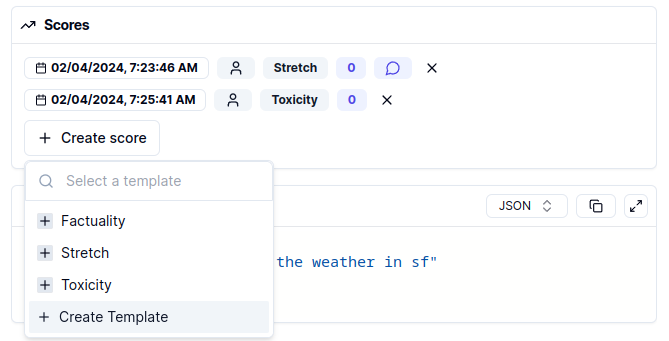

The Literal AI application offers an easy way to manage scores: Score Templates.

To create a Score Template, click on “Create score” and select the “Create Template” option:

Create Template option

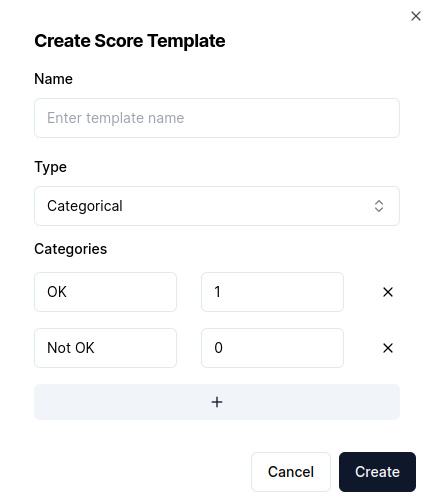

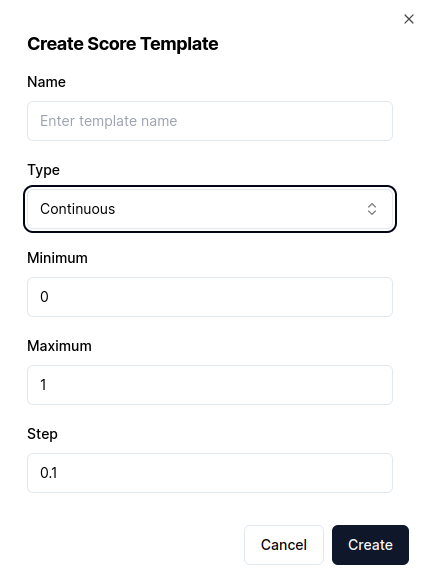

With Score Templates, admin users control which types of evaluation are exposed to users in the application. Score Templates come in two flavors: Categorical and Continuous.

Categorical templates let you create a set of categories, each tied to a numeric value:

Create categorical Score Template
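A categorical template can be thought of as a lookup from category labels to numbers. The sketch below models one as a small dataclass; the class name, the example categories and their values are hypothetical and only mirror the idea described above, not Literal AI's internal data model.

```python
from dataclasses import dataclass, field


@dataclass
class CategoricalTemplate:
    """Hypothetical model of a categorical Score Template."""

    name: str
    categories: dict[str, float] = field(default_factory=dict)

    def score_for(self, category: str) -> float:
        # Each category is tied to a numeric value; unknown labels are rejected.
        if category not in self.categories:
            raise ValueError(f"unknown category: {category!r}")
        return self.categories[category]


relevance = CategoricalTemplate(
    name="relevance",
    categories={"irrelevant": 0.0, "partially relevant": 0.5, "relevant": 1.0},
)
print(relevance.score_for("partially relevant"))  # 0.5
```

Storing a number behind each label keeps categorical scores comparable with continuous ones when they are aggregated.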

Continuous templates define a minimum and a maximum value; users then score by picking a value within that range.

Create continuous Score Template
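Similarly, a continuous template boils down to a range check. In this hypothetical sketch both bounds are assumed to be inclusive, which the text above does not specify; the class and field names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContinuousTemplate:
    """Hypothetical model of a continuous Score Template."""

    name: str
    minimum: float
    maximum: float

    def __post_init__(self) -> None:
        if self.minimum >= self.maximum:
            raise ValueError("minimum must be lower than maximum")

    def validate(self, value: float) -> float:
        # Assumption: any value in [minimum, maximum], bounds included, is valid.
        if not self.minimum <= value <= self.maximum:
            raise ValueError(f"{value} is outside [{self.minimum}, {self.maximum}]")
        return value


faithfulness = ContinuousTemplate(name="faithfulness", minimum=0.0, maximum=1.0)
print(faithfulness.validate(0.9))  # 0.9
```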

From a Step or a Generation, admins can then score by selecting a template and filling in the required form fields.

Scores can be deleted individually from a Step or a Generation via their delete button.

By default, any admin user may create a score template. Only project owners can delete score templates, which they do from the Settings page.

Programmatically

The SDKs provide score creation APIs with all fields exposed. Scores must be tied to either a Step or a Generation object.
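Because every score must point at a Step or a Generation, it can help to validate the target before calling the SDK. The helper below is a hypothetical sketch that assumes exactly one of `step_id` or `generation_id` should be set, and that the accepted types are `"HUMAN"` and `"AI"`. It only builds keyword arguments shaped like the `create_score` call in the FastAPI example on this page; `generation_id` and `"AI"` are not shown there, so check the SDK reference before relying on them.

```python
from typing import Optional

# Assumption: "HUMAN" appears in the example on this page; "AI" is inferred
# from the AI Evaluation category.
ALLOWED_TYPES = {"HUMAN", "AI"}


def build_score_kwargs(
    name: str,
    value: float,
    score_type: str = "HUMAN",
    step_id: Optional[str] = None,
    generation_id: Optional[str] = None,
    comment: Optional[str] = None,
) -> dict:
    """Return keyword arguments for a create_score-style call (hypothetical helper)."""
    if score_type not in ALLOWED_TYPES:
        raise ValueError(f"unsupported score type: {score_type!r}")
    # Exactly one target: the score belongs to a Step or to a Generation.
    if (step_id is None) == (generation_id is None):
        raise ValueError("provide exactly one of step_id or generation_id")
    kwargs = {"type": score_type, "name": name, "value": value, "comment": comment}
    if step_id is not None:
        kwargs["step_id"] = step_id
    else:
        kwargs["generation_id"] = generation_id
    return kwargs


kwargs = build_score_kwargs("user-feedback", 0.9, step_id="my-step-id")
print(sorted(kwargs))  # ['comment', 'name', 'step_id', 'type', 'value']
```

With a configured client, the result could then be passed along as `literal_client.api.create_score(**kwargs)`.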

The example below shows how you can receive human feedback on a FastAPI server and record it by calling the `create_score` API in Python.

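```python
import os
from typing import Optional

from fastapi import FastAPI
from literalai import LiteralClient
from pydantic import BaseModel

literal_client = LiteralClient(api_key=os.getenv("LITERAL_API_KEY"))

app = FastAPI()


class Feedback(BaseModel):
    """Assumed request body sent by the front end when a user rates an answer."""

    step_id: str  # ID of the `Step` the user gave feedback for
    value: float  # e.g. 0.9, or 1.0 / 0.0 for a thumbs-up / thumbs-down
    comment: Optional[str] = None


@app.post("/feedback/")
def receive_human_feedback(feedback: Feedback):
    # Create a score on the `Step` the user gave feedback for.
    return literal_client.api.create_score(
        type="HUMAN",
        name="user-feedback",
        value=feedback.value,
        comment=feedback.comment,
        step_id=feedback.step_id,
    )


# Serve the app with an ASGI server, e.g. `uvicorn main:app` if this file is
# main.py, rather than calling the endpoint function at import time.
```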