
OpenAI

You can use the Literal AI platform to instrument OpenAI API calls. This lets you track and monitor how your application uses the OpenAI API, and replay the logged calls in the Prompt Playground.

The OpenAI instrumentation supports completions, chat completions, and image generation. It handles both streaming and synchronous responses.
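To show what "instrumenting" a call involves, here is a minimal, stdlib-only sketch. It does not use the Literal AI SDK or the OpenAI client. `instrument`, `fake_create`, and `LOGGED_GENERATIONS` are hypothetical names. The sketch wraps a create-style function so that every call is recorded. Synchronous results are logged right away. Streaming results are passed through chunk by chunk and logged once the stream is exhausted.

```python
import functools
import time
from typing import Any, Callable, Dict, Iterator, List

# Hypothetical in-memory store standing in for a logging backend.
LOGGED_GENERATIONS: List[Dict[str, Any]] = []


def instrument(create: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap a create-style function so that every call is logged.

    Synchronous results are logged as soon as the call returns. Streaming
    results (requested with stream=True) are passed through chunk by chunk
    and logged once the caller has consumed the whole stream.
    """

    @functools.wraps(create)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        result = create(*args, **kwargs)

        def record(output: Any) -> None:
            LOGGED_GENERATIONS.append({
                "input": kwargs.get("messages"),
                "output": output,
                "duration_s": time.perf_counter() - start,
            })

        if not kwargs.get("stream", False):
            record(result)
            return result

        def passthrough() -> Iterator[str]:
            chunks: List[str] = []
            for chunk in result:
                chunks.append(chunk)
                yield chunk
            record("".join(chunks))  # runs only after the stream is exhausted

        return passthrough()

    return wrapper


def fake_create(messages: List[Dict[str, str]], stream: bool = False) -> Any:
    """Hypothetical stand-in for a chat completion call; makes no network request."""
    if stream:
        return iter(["Hel", "lo", "!"])
    return "Hello!"


create = instrument(fake_create)
messages = [{"role": "user", "content": "Hi"}]
print(create(messages=messages))                         # prints Hello!
print("".join(create(messages=messages, stream=True)))  # prints Hello!
print(len(LOGGED_GENERATIONS))                           # prints 2
```

The streaming branch records only after the last chunk. As a result, a stream that the caller abandons partway through is never logged. Any real integration has to choose how to handle that case.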

Instrumenting OpenAI API calls

With Threads and Steps

You can use Threads and Steps on top of the OpenAI API to create structured and organized logs.

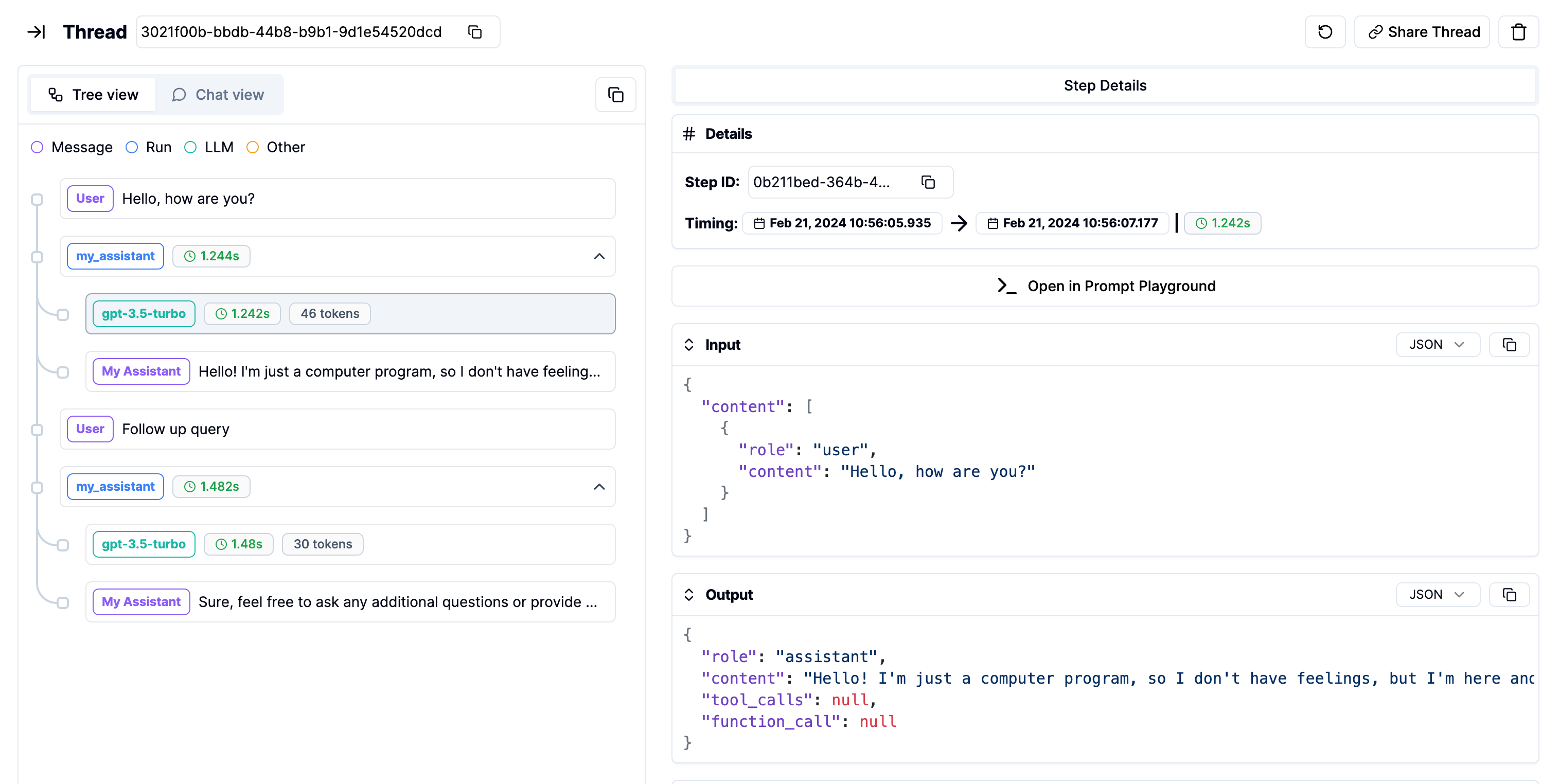

This produces the following logs on the Threads page:

OpenAI thread
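The following is a conceptual sketch of how Threads and Steps give structure to the logged calls. It uses only the standard library and is not the Literal AI SDK. `Tracer`, `thread_scope`, `step`, and `log_generation` are hypothetical names. A thread groups top-level steps. Each step can contain nested steps. An instrumented LLM call is attached as a leaf "llm" step under whichever step is currently open.

```python
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass
class Step:
    """Hypothetical log node: a run step or an LLM generation."""
    name: str
    kind: str  # e.g. "run" for a step, "llm" for a generation
    children: List["Step"] = field(default_factory=list)


@dataclass
class Thread:
    """Hypothetical top-level container grouping the steps of one conversation."""
    name: str
    steps: List[Step] = field(default_factory=list)


class Tracer:
    """Tracks the active thread and the stack of currently open steps."""

    def __init__(self) -> None:
        self.thread: Optional[Thread] = None
        self._stack: List[Step] = []

    @contextmanager
    def thread_scope(self, name: str) -> Iterator[Thread]:
        self.thread = Thread(name)
        try:
            yield self.thread
        finally:
            self.thread = None

    def _attach(self, step: Step) -> None:
        # Nest under the innermost open step, else at the top level of the thread.
        if self._stack:
            self._stack[-1].children.append(step)
        elif self.thread is not None:
            self.thread.steps.append(step)

    @contextmanager
    def step(self, name: str, kind: str = "run") -> Iterator[Step]:
        step = Step(name, kind)
        self._attach(step)
        self._stack.append(step)
        try:
            yield step
        finally:
            self._stack.pop()

    def log_generation(self, name: str) -> None:
        # What an instrumented LLM call would do: record a leaf "llm" step.
        self._attach(Step(name, "llm"))


tracer = Tracer()
with tracer.thread_scope("Example") as thread:
    with tracer.step("Assistant"):
        tracer.log_generation("chat completion")

print(thread.steps[0].name, "->", thread.steps[0].children[0].kind)  # prints Assistant -> llm
```

In Literal AI itself, threads and steps are created through its SDK. Check the SDK reference for the actual helpers and their parameters.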

Adding Tags

You can add Tags to the Generations and Steps created via the instrumentation.
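Tags are mainly useful for filtering logged items later. Below is a hypothetical, stdlib-only sketch of that idea. It is not the Literal AI SDK, and `LoggedItem`, `log_item`, and `with_tag` are illustrative names. Each generation or step carries a list of tags, duplicate tags are stored once, and items can be selected by tag. The way tags are actually passed through the OpenAI instrumentation is defined by the Literal AI SDK and is not shown here.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional


@dataclass
class LoggedItem:
    """Hypothetical record for a generation or a step, carrying its tags."""
    name: str
    kind: str  # "llm" for a generation, "run" for a step
    tags: List[str] = field(default_factory=list)


def log_item(log: List[LoggedItem], name: str, kind: str,
             tags: Optional[Iterable[str]] = None) -> LoggedItem:
    # dict.fromkeys drops duplicate tags while preserving their order.
    item = LoggedItem(name, kind, list(dict.fromkeys(tags or [])))
    log.append(item)
    return item


def with_tag(log: Iterable[LoggedItem], tag: str) -> List[LoggedItem]:
    """Return every logged item that carries the given tag."""
    return [item for item in log if tag in item.tags]


log: List[LoggedItem] = []
log_item(log, "Assistant", "run", tags=["production"])
log_item(log, "chat completion", "llm", tags=["production", "gpt-4o", "production"])
log_item(log, "image generation", "llm", tags=["experiment"])

print([item.name for item in with_tag(log, "production")])  # prints ['Assistant', 'chat completion']
```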
